My website resembles a well-tended garden, with original content that flourishes with each visitor. However, with the advancement of AI tools skilled in extracting data from websites, I've recognized the need to bolster my site's defenses to block these unwanted extractions. Through my experience, I've gathered strategies to protect your website from AI scraping effectively. Let's go through some steps to protect your site. I'll guide you on implementing robots.txt directives, setting up CAPTCHA challenges, and additional methods to ensure your content remains exclusively on your domain. It's all about maintaining the sanctity of your online realm, making sure it's the human visitors who reap the benefits of your hard work.

In the spirit of keeping your digital haven safe, remember, “A sturdy gate ensures that only the welcome can appreciate the garden within.”

Key Takeaways

Protecting my website from AI scrapers is a continuous battle that demands attention and proactive strategies. I've found that effectively configuring my robots.txt file, setting up CAPTCHA, identifying and blocking known AI scraper tools, controlling who can access my content, and frequently updating security protocols are crucial strategies. Adding legal protections provides another defense layer, but staying vigilant and technically sharp is the best way to keep my content secure and uphold my site's value for visitors.

Remember to keep your website's defenses up to date, as methods for data scraping are constantly advancing. Regularly review your security settings and be ready to adapt to new challenges to keep your content safe.

Understanding AI Web Scraping

As we approach the topic of AI web scraping, it's crucial to recognize the ethical implications of this practice. I'll evaluate the potential risks and benefits, ensuring that we establish a framework for ethical conduct in AI data collection. After that, I'll explore the technical countermeasures available to website owners seeking to protect their content from unauthorized AI scraping.

Scraping Ethical Concerns

Understanding the Ethical Dimensions of AI Content Scraping

Why should you be concerned about the ethical aspects of AI tools extracting content from your website? When examining this topic, it's vital to look at the complexity of data privacy. Unregulated AI scraping can lead to the unauthorized collection of proprietary information, which might infringe on the intellectual property of those who create content. It's also important to comply with laws that control how data is gathered and used. These laws aim to shield individuals and companies from privacy breaches and the misuse of their information. Being up to date with these regulations is necessary to keep your website content safe and to ensure your practices are ethically sound as technology advances.

Countermeasures for Scraping

To prevent automated systems from harvesting data from my website, I make routine adjustments to the robots.txt file. This careful practice lets me define which parts of my website are accessible to bots like GPTBot. By continuously updating these instructions, I protect my website content from unauthorized extraction by automated tools.

In doing so, I'm not just following a technical routine; I'm taking a stand to safeguard the value and privacy of the information I've worked hard to create. As webmasters, we must be vigilant and proactive to secure our digital properties and preserve our users' trust.

Remember, a well-maintained robots.txt file is a simple yet effective layer of defense against the relentless attempts of data scrapers.

Update Robots.txt Regularly

Maintaining the security of your website's content means regularly reviewing and updating your robots.txt file. This is how I do it effectively:

- Set a regular schedule for updates.

- Apply the best methods for specifying which parts of your site user-agents (like web crawlers) can access.

- Keep an eye on the latest developments in AI scraping tools to stay ahead of potential security risks.

- Make necessary adjustments to the paths that are off-limits to ensure that your content remains protected from unauthorized access.

Why Update Your Robots.txt?

Updating your robots.txt file is a simple way to help safeguard your website. It tells search engines and other web crawlers which pages or sections of your site they should not crawl. Keep in mind that robots.txt is a voluntary standard: well-behaved crawlers honor it, but it does not technically stop a bot that chooses to ignore it, so it works best as one part of a larger strategy to protect your site's content.

Remember, as new types of web crawlers emerge, staying vigilant and adapting your robots.txt file is a smart move. A well-maintained robots.txt file is critical to your website's overall security strategy.

Utilizing Robots.txt Effectively

To protect your website from unwanted automated data collection, let's discuss how to update the robots.txt file carefully. You can instruct certain web crawlers, such as OpenAI's GPTBot, to either access or bypass your site content by creating specific user-agent rules. By setting up these parameters with attention to detail, you gain precise control over which parts of your site can be indexed or ignored by different AI systems.
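
As a minimal sketch of what such user-agent rules look like, the Python snippet below generates a robots.txt body that blocks a list of crawlers site-wide and leaves everything else open. The function name `build_robots_txt` and its parameters are hypothetical, chosen only for illustration; the crawler names are the ones mentioned in this post.

```python
def build_robots_txt(blocked_agents, allow_others=True):
    """Return robots.txt text that blocks each listed crawler site-wide."""
    groups = []
    for agent in blocked_agents:
        # Each group is a User-agent line followed directly by its rules.
        groups.append(f"User-agent: {agent}\nDisallow: /")
    if allow_others:
        # An empty Disallow means nothing is off-limits for everyone else.
        groups.append("User-agent: *\nDisallow:")
    # Blank lines separate groups, never a User-agent line from its rules.
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(["GPTBot", "CCBot"]))
```

Generating the file from a list makes it easier to add a newly announced crawler in one place, though editing the file by hand works just as well for a short list.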

Edit Robots.Txt Correctly

To safeguard your website from unwanted AI-powered scraping, it's vital to manage your robots.txt file with care. This step is fundamental in keeping your website's data private and complying with data gathering laws. Here's my guide to do it effectively:

- Find the File: First, I log into my website's server and locate the existing robots.txt file.

- Review Current Rules: Next, I take a close look at the file to fully grasp the existing rules and what they mean for my site.

- Update with Care: With attention to detail, I adjust or insert new rules to specify what AI systems can and can't do, using 'Disallow:' to block and 'Allow:' to give access.

- Verify the Edits: Once I've made changes, I run the updated robots.txt through testers to ensure the rules are correctly written and functioning as intended.

By carefully executing these steps, I update my robots.txt file to keep my site secure while still welcoming search engines that help people find my content.
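
The verification step can also be done locally. The sketch below uses Python's standard `urllib.robotparser` module to check how a draft robots.txt treats different crawlers before it is uploaded. The rules, the `is_allowed` helper, and the example.com URL are illustrative assumptions, not my actual configuration.

```python
from urllib.robotparser import RobotFileParser

# Draft rules to test (illustrative): block two AI crawlers, allow the rest.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "CCBot", "Googlebot"):
        verdict = is_allowed(ROBOTS_TXT, agent, "https://example.com/blog/post")
        print(f"{agent}: {'allowed' if verdict else 'blocked'}")
```

This only confirms that the rules say what I intend; it cannot confirm that a particular crawler actually obeys them.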

Implementing CAPTCHA Verification

Turning our attention to CAPTCHA verification, this method serves as a solid barrier against unauthorized automated data harvesting. It operates by distinguishing genuine human activity from that of automated software, effectively blocking unwanted bots while permitting real users access. Nonetheless, when incorporating CAPTCHA, it's vital to consider its potential effects on user interaction. Striking the right balance is key to ensuring that your website remains user-friendly.

CAPTCHA Effectiveness

Incorporating CAPTCHA checks is a solid strategy to protect my website from unauthorized content scraping by automated tools. Here's my perspective on why it's an effective measure:

- Complex Challenges: Sophisticated CAPTCHAs pose intricate puzzles that are tough for automated systems but still manageable for people.

- Constant Updates: By frequently refreshing CAPTCHA algorithms, they can outpace the progression of AI that could otherwise sidestep unchanging systems.

- Layered Security: When CAPTCHA is used alongside other security measures, it creates a fortified barrier against unauthorized access.

- Vigilance: Monitoring CAPTCHA's performance and success rate can signal when it's time to make adjustments or improvements.

While adding CAPTCHA does bolster security, I always consider the ethical side and aim to keep the impact on users as low as possible. Finding the right balance between robust security and user accessibility is a careful, continuous task.

User Experience Impact

While putting CAPTCHA checks in place, I'm well aware that they can sometimes irritate users, even if they're good at stopping bots that scrape content using AI. My assessment shows that CAPTCHAs are effective at keeping these bots at bay, which helps manage the flow of website visitors and lowers the chances of content being copied without permission. Nevertheless, it's vital to use this tool wisely to prevent driving away the people who visit your site. It's all about finding the right balance between making your content easy to get to and protecting it against unwanted AI scraping. Too many CAPTCHA tests can push away just as many real users as bots. I use CAPTCHAs in areas where scraping is most likely to happen while keeping the rest of the site user-friendly. My goal is to offer a great experience for site visitors while also keeping the site's content secure from any unauthorized scraping by AI.

Blocking Specific AI Crawlers

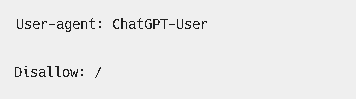

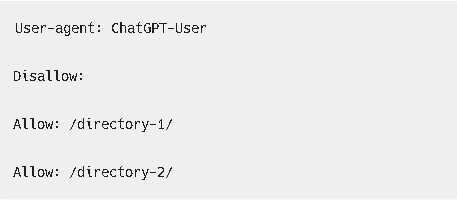

As someone who runs a website, I have the ability to block certain AI crawlers, like OpenAI's GPTBot, to stop them from copying content from my site. This step is not just about stopping unauthorized collection of my content, but it's also about respecting ethical standards and legal rules regarding content use. Here's how I approach it:

- Modify robots.txt: I adjust this file with specific instructions for AI crawlers outlining what parts of my site they're barred from.

User-agent: GPTBot
Disallow: /

User-agent: ChatGPT-User
Disallow: /

User-agent: CCBot
Disallow: /

- Check Server Logs: I make it part of my routine to go through my server's logs to spot any AI crawler activity that seems out of place.

- Set Up CAPTCHAs: On parts of my website where users interact, I use CAPTCHAs. These tests are great at telling real people apart from automated bots.

- Block Certain IP Addresses: When I need to, I block the IP addresses that I know are tied to AI crawlers to keep them away from my site.

By doing these things, I protect my content and make sure I'm following the rules related to data privacy and intellectual property.
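
The log check in the list above can be sketched in a few lines of Python. This example assumes the server writes logs in the common "combined" format, where the user agent is the last quoted field; the sample lines, the IP addresses (reserved documentation ranges), and the `count_ai_crawler_hits` helper are all made up for illustration.

```python
import re
from collections import Counter

# Crawler names mentioned in this post; extend the tuple as new ones appear.
AI_CRAWLER_TOKENS = ("GPTBot", "ChatGPT-User", "CCBot")

# Combined log format: client IP first, user agent as the last quoted field.
LOG_PATTERN = re.compile(r'^(?P<ip>\S+) .*"(?P<agent>[^"]*)"$')


def count_ai_crawler_hits(log_lines):
    """Count requests per (crawler token, client IP) found in the log lines."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.match(line.strip())
        if not match:
            continue  # Skip lines that are not in the expected format.
        agent = match.group("agent").lower()
        for token in AI_CRAWLER_TOKENS:
            if token.lower() in agent:
                hits[(token, match.group("ip"))] += 1
    return hits


SAMPLE_LOG = [
    '203.0.113.5 - - [10/Jan/2024:10:00:00 +0000] "GET /post HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Jan/2024:10:00:01 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '203.0.113.9 - - [10/Jan/2024:10:00:02 +0000] "GET /a HTTP/1.1" 200 300 "-" "CCBot/2.0"',
    '203.0.113.9 - - [10/Jan/2024:10:00:03 +0000] "GET /b HTTP/1.1" 200 300 "-" "CCBot/2.0"',
]

if __name__ == "__main__":
    for (token, ip), count in count_ai_crawler_hits(SAMPLE_LOG).items():
        print(f"{token} from {ip}: {count} request(s)")
```

A user-agent string is easy to fake, so a match here is a hint worth investigating rather than proof of who is behind the request.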

Managing Content Accessibility

Protecting Your Website Content from Unauthorized Scraping

To address the concerns of content scraping, let's discuss effective methods for controlling who can access your website's content. It's vital to restrict bot entry, and I'll outline specific techniques to prevent these automated systems from copying or indexing your site materials. This will involve technical changes and careful setting of access control measures.

Protecting Your Website Content

For those who manage a website, ensuring that your content remains exclusive and protected from automatic scraping systems is a key concern. Implementing specific technical measures can help you control who has the ability to access and index your website's content.

You might consider adjusting your robots.txt file to instruct search engine bots which parts of your site should not be accessed. Employing CAPTCHA systems can also deter bots without hindering human users. For a more sophisticated approach, you might implement server-side checks to discern between legitimate visitors and potential scrapers.

Remember, the integrity and exclusivity of your content are paramount. By taking proactive steps to secure your site, you maintain control over your content and its distribution. After all, the content you create is a reflection of your brand and should be safeguarded with care.

Limiting Bot Access

I've discovered that taking specific steps can greatly lower the risk of automated systems harvesting content from my site. Here's how I approach it:

- Adjusting Robots.txt: I fine-tune my robots.txt file to control bot access, keeping in mind the legal aspects of scraping and data privacy concerns.

- Implementing Rate Limits: By introducing rate limits on my server, I can curb the potential disruptive effects of bot traffic.

- Applying API Controls: I share as little information as necessary through APIs and require proper authentication to restrict entry.

- Using Content Delivery Networks: Employing CDNs that come with bot management capabilities allows me to manage who accesses my content and safeguard it effectively.

Taking these steps forms a strong line of defense against unauthorized harvesting of content by automated tools.
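
To make the rate-limiting idea concrete, here is a small sliding-window limiter in Python. It is a sketch under stated assumptions rather than a production setup: the class name, the limits, and the use of a client identifier such as an IP address are my own illustrative choices, and in practice this job is often handled by the web server or a CDN.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> recent request times

    def allow(self, client_id, now=None):
        """Record a request and return True, or return False if over the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=10)
    for second in range(5):
        print(second, limiter.allow("203.0.113.5", now=float(second)))
```

The optional `now` argument exists only so the behavior can be tested with fixed timestamps; a real request handler would call `allow(client_id)` and respond with an error such as HTTP 429 when it returns False.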

Content Scraping Prevention

After updating my robots.txt file, I'm now focusing on measures to prevent content scraping, ensuring my website remains accessible yet secure. I'm examining the technical aspects of scraping, its legal consequences, and the importance of protecting user data from sophisticated AI scraping methods.

| Strategy | Description |
|---|---|
| Variable Content Delivery | Provide different content to automated tools than to human visitors. |
| User Activity Monitoring | Check for behaviors that might indicate scraping. |
| Access Restrictions | Control how often users can access content and block suspicious IP addresses. |

By carefully putting these strategies into place, I'm not just protecting my website's content, but I'm also keeping user information private and secure. This is a deliberate plan to manage my website's content and to deter unauthorized access or misuse by automated tools.

Incorporating these strategies is a smart way to keep ahead of those who might attempt to misuse your hard work. It's like setting up a sophisticated alarm system that not only keeps an eye out for intruders but also respects the privacy of your guests. It's about being proactive rather than reactive in the face of potential threats.
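
As one example of user activity monitoring, the sketch below flags a client whose requests arrive in large numbers at almost perfectly regular intervals, a pattern that is more typical of a script than of a person reading pages. The function name and both thresholds are hypothetical values picked for illustration, and a real system would combine several signals instead of relying on this one.

```python
from statistics import pstdev


def looks_automated(timestamps, min_requests=20, max_jitter_seconds=0.2):
    """Heuristic: many requests at near-constant intervals suggest a script.

    timestamps: request times for one client, in seconds.
    """
    if len(timestamps) < min_requests:
        return False  # Too little data to judge.
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    # Humans pause unevenly; a tiny spread between gaps is suspicious.
    return pstdev(gaps) <= max_jitter_seconds


if __name__ == "__main__":
    steady = [i * 1.0 for i in range(30)]  # one request every second
    print("steady client flagged:", looks_automated(steady))
```

A flagged client is a candidate for a closer look or a CAPTCHA, not an automatic ban, since some legitimate tools also poll at fixed intervals.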

Regularly Updating Security Measures

Setting up initial defenses like tweaking your robots.txt or adding CAPTCHA is a great start, but to effectively guard against advanced AI tools that scrape content, it's vital to continuously refresh your website's security strategies. The tech environment is in a state of constant flux, with AI capabilities becoming more sophisticated and occasionally slipping past older security methods. Therefore, maintaining your website's security requires a strategic, tech-savvy, and systematic approach.

Here's my strategy:

- Routine Security Reviews: I make it a point to conduct security checks at regular intervals to spot any emerging weak spots, ensuring my safeguards are up to date and effective.

- Staying on Top of Updates: I keep abreast of the newest security patches and make sure all the software elements of my site are current.

- Adapting Security Measures: I adjust my security settings to tackle specific threats, which helps keep a healthy balance between protecting content and ensuring it's accessible for the right reasons.

- Traffic Analysis and Reporting: By keeping an eye on how traffic flows to my site and scrutinizing the access logs, I'm able to quickly identify and act upon suspicious behavior that might indicate an attempt at AI scraping.

Securing my website is not a set-it-and-forget-it affair; it's a continuous challenge to fend off those with ill intentions. By remaining alert and proactive about security, I'm safeguarding not just my site's content but also the privacy of those who visit.

Exploring Legal Protections

Navigating legal complexities, I'm examining copyright laws and regulations against unauthorized AI scraping to protect my website. It's essential to take a systematic approach to understand how national and international copyright laws affect the material on my site. I have also reviewed the Digital Millennium Copyright Act (DMCA) to see how it can defend my content from AI-driven infringements.

Assessing the terms of use for AI tools is a responsible step to ensure they don't overreach in their rights to use and gather data from websites. This attention to detail is key to preserving my site's user experience and preventing the misuse of my content, which could diminish my brand's impact and reduce visitor engagement.

Additionally, I'm considering technical strategies like implementing strict access controls and constant traffic analysis to identify and mitigate scraping attempts. A combination of legal measures and technical safeguards is my plan to maintain my website's distinctiveness and protect the creative effort behind it.

Frequently Asked Questions

If I Block AI Tools From Scraping My Website, Will It Affect My Site's Visibility or Ranking on Other Search Engines Like Google or Bing?

Blocking AI tools does not, by itself, change how well my site performs on search engines such as Google or Bing. Rules in robots.txt apply per user agent, so a rule that disallows GPTBot or CCBot has no effect on the crawlers those search engines use, provided I don't also disallow them or add a blanket rule that blocks all user agents. These search engines use their own crawlers and ranking algorithms and don't depend on indexing by AI tools. In practice, the task is to keep the blocking rules narrow enough that my content stays protected while my position in search results is unaffected.

How Can I Differentiate Between Legitimate Search Engine Crawlers and AI Scrapers When Analyzing My Website's Traffic?

To tell apart legitimate search engine crawlers from unauthorized AI scrapers when looking at my website's traffic, I closely examine patterns in user behavior that may suggest automated interactions. To keep out potentially harmful traffic, I apply IP blocking techniques. I also take advantage of bot detection tools, which assist me in pinpointing and controlling unapproved bots. These measures help me safeguard my content while ensuring that my site remains accessible to reputable search engines.

Understanding the difference between genuine and artificial traffic ensures that my website analytics remain accurate and that my content doesn't fall into the wrong hands. As a website owner, it's my responsibility to keep my digital property secure, just as one would protect a physical store from shoplifters. With these strategies in place, I can confidently manage my website's traffic and maintain its integrity.
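
One way to check that a visitor claiming to be a search engine crawler is genuine is a reverse DNS lookup followed by a forward lookup, which is the method Google documents for verifying Googlebot. The Python sketch below assumes IPv4 addresses and only the googlebot.com and google.com hostname suffixes; the function name and the injectable lookup parameters are my own additions so the logic can be exercised without network access.

```python
import socket

# Hostname suffixes used by Google's crawlers; other engines publish their own.
TRUSTED_SUFFIXES = (".googlebot.com", ".google.com")


def is_verified_crawler(ip, reverse_lookup=None, forward_lookup=None):
    """Return True if ip reverse-resolves to a trusted host that maps back to ip."""
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        hostname = reverse_lookup(ip)
        if not hostname.endswith(TRUSTED_SUFFIXES):
            return False
        # The forward lookup defeats spoofed reverse DNS records.
        return ip in forward_lookup(hostname)
    except OSError:
        return False  # Lookup failed, so the claim cannot be verified.


if __name__ == "__main__":
    # 203.0.113.5 is a documentation address, used here only as a placeholder.
    print(is_verified_crawler("203.0.113.5"))
```

A request that claims a search engine user agent but fails this check is a reasonable candidate for the IP blocking described above.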

What Steps Should I Take if I Notice That My Content Has Already Been Scraped by an AI Tool Without My Permission?

Upon discovering that my content has been used by an AI tool without my consent, the first step is to meticulously record every instance of this violation. Next, I would attempt to reclaim my content by contacting the party responsible, or if needed, by issuing DMCA takedown requests. Should these measures fail to resolve the issue, considering legal recourse is an option. Additionally, it's beneficial to inform the public about the unauthorized use of my work, promoting the ethical usage of AI tools. Vigilance and immediate action are key in safeguarding one's creative rights online.

Remember: Protecting your creative work is not just a right; it's a responsibility.

Are There Any Industry Standards or Best Practices for Watermarking My Content to Indicate That It Shouldn't Be Used for Training AI Models?

I'm currently reviewing methods for protecting my content from unauthorized use in training AI models. One approach is to use digital watermarking and content fingerprinting, which insert invisible markers or distinctive codes into my work. When combined with explicit policies regarding use, these strategies serve as a sign that my materials should not be used for training AI models. The community is still working towards a common set of guidelines on the matter, so I'm staying informed about the latest strategies to ensure my work is properly safeguarded.

“Protecting intellectual property in an age where data is constantly fed into algorithms is a shared concern for creators. It's wise to be proactive and informed.”

What Can I Do if AI Tools Learn to Bypass CAPTCHA?

Should AI tools develop the capability to bypass CAPTCHA, I would need to adopt more sophisticated security strategies to safeguard my website from unauthorized data extraction. One effective method is Behavioral Biometrics, which monitors irregularities in how users interact with the site. This can help differentiate between human visitors and potential automated scrapers.

Another layer of protection involves Fingerprint Analysis. This technique evaluates the unique attributes of a device and its browser, such as the operating system, screen resolution, and installed fonts, to spot inconsistencies typical of bot activity.

To stay one step ahead, I would put into action Adaptive Challenges. These are security checks that can vary in complexity based on the assessed risk, ensuring a dynamic defense that adjusts to the level of threat detected. By employing these advanced methods, I can significantly reinforce my website's security against the latest AI-powered scraping tools.
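
The adaptive challenge idea can be sketched as a simple scoring step followed by a decision. Everything specific in this Python example is an assumption made for illustration: the signal names, their weights, the thresholds, and the three response levels. A real deployment would tune these against its own traffic.

```python
# Hypothetical signals and integer weights (points out of 100).
SIGNAL_WEIGHTS = {
    "high_request_rate": 40,
    "regular_timing": 30,
    "fingerprint_mismatch": 30,
}


def score_risk(signals):
    """Add up the weights of every signal that is present and truthy."""
    return sum(weight for name, weight in SIGNAL_WEIGHTS.items() if signals.get(name))


def choose_challenge(risk_score):
    """Map a 0-100 risk score to a response that scales with the threat."""
    if not 0 <= risk_score <= 100:
        raise ValueError("risk_score must be between 0 and 100")
    if risk_score < 30:
        return "none"      # Low risk: let the visitor through untouched.
    if risk_score < 70:
        return "captcha"   # Medium risk: ask for proof of a human.
    return "block"         # High risk: refuse the request.


if __name__ == "__main__":
    visitor = {"high_request_rate": True, "regular_timing": False}
    print(choose_challenge(score_risk(visitor)))
```

Keeping the scoring separate from the decision means new signals, such as the behavioral or fingerprint checks described above, can be added without touching the response logic.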

What is AI scraping protection in the context of the World Wide Web?

AI scraping protection refers to methods and technologies used to prevent automated bots from harvesting or scraping data from websites without permission. These technologies leverage artificial intelligence capabilities to detect, identify and block such activities.

Why are AI scrapers a threat to intellectual property on the internet?

AI scrapers pose a threat because they can quickly and efficiently collect large amounts of proprietary information published on the web. This data could include copyrighted content, trade secrets, databases or other digital assets that are intended for use solely on the source website.

How does an AI scraper work?

An AI scraper works by simulating human browsing behavior. It visits web pages, identifies relevant information based on pre-defined criteria, then extracts this data for use elsewhere. The sophistication of these tools varies widely; some are capable of navigating complex site structures and evading basic anti-scraping measures.

What techniques are commonly employed in AI scraping protection?

Techniques often employed in AI scraping protection include rate limiting (restricting how many requests an IP address can make within a certain time period), CAPTCHA tests (which challenge users to prove they're human), user agent analysis (to identify suspicious browser activity), and more advanced machine learning algorithms that can detect unusual patterns indicative of bot behavior.

Can Artificial Intelligence be used in protecting against web scraping activities?

Yes, various forms of artificial intelligence like machine learning algorithms can be utilized for detecting and preventing web scraping. These systems learn from previous instances of bot behavior, allowing them to better anticipate and thwart potential future attacks. They may also implement real-time detection techniques which allow immediate action when suspected bot activity occurs.

My final thoughts on protecting your website from getting scraped by AI tools

Keeping my website safe from unwanted AI scraping is an ongoing effort that requires diligence. I have found that smart use of robots.txt, implementing CAPTCHA, blocking recognized AI scrapers, managing access to content, and consistently updating my security measures are vital steps. While adding legal measures offers an extra layer of protection, remaining alert and technically adept is key to ensuring my content stays within my purview, thus maintaining my website's integrity and the value it offers to those who visit it.
